By | Si Xiao, Dean of Tencent Research Institute

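Over the past few years, as technology has advanced, products built on artificial intelligence have entered every corner of everyday life. Examples range from news-app feeds that seem to "understand you" better and better, to cars that have begun testing autonomous driving on closed roads.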

But is this AI "servant" truly loyal? Or, put differently, is loyalty the only ethical rule AI needs to follow?

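As AI takes over more and more of the work once done by humans, AI ethics has become one of the foremost concerns of the technology industry, regulators, and the public.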

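On December 3, 2018, at the 7th Peking University-Stanford Forum on Internet Law and Public Policy, Si Xiao, Dean of Tencent Research Institute, gave a talk titled "Towards an Ethical Framework for Artificial Intelligence."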

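Si Xiao reiterated the "four-can" concept of AI that Tencent Chairman Pony Ma put forward at the 2018 World Artificial Intelligence Conference. Under this concept, future AI should be "knowable," "controllable," "usable," and "reliable." At this forum, Si rendered the four principles in English as "ARCC" (Available, Reliable, Comprehensible, and Controllable), pronounced "ark." The name immediately calls to mind Noah's Ark, which preserved the spark of human life. Si does not believe AI threatens humanity's survival, but he considers the ark a fitting metaphor for how important ethics is to AI. He also explained in detail the meaning behind each of the four principles.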

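Si Xiao said Tencent's vision is to help AI developers and their products win public trust, starting from an ethical framework such as the "four-can" principles. A new technology is in itself neither good nor bad. It is our responsibility, however, to ensure through ethics, law, and institutional design that it becomes a "good technology."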

—— Full text of the speech ——

Distinguished leaders, scholars, and guests, good afternoon.

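From the previous speaker's talk, we are glad to learn that someone has seriously considered the possibility of machines turning against their human creators.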

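There is no doubt that the "Three Laws of Robotics" proposed by the writer Isaac Asimov can no longer keep pace with today's AI. We need more serious and concrete ways to guide technological development and protect the well-being of all humanity. I would therefore like to share some thoughts on AI development from an ethical perspective, under the title "Towards an Ethical Framework for Artificial Intelligence."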

The current wave of AI development owes much to the vast amount of data we have accumulated by using the internet.

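Today, the internet connects more than half of the world's population, with 800 million internet users in China alone. Along with convenience and efficiency, however, the internet also brings risks, especially in an era when big data and AI drive so much of daily life. Algorithms determine what we read, where we go and how we get there, what music we listen to, and what we buy and at what price. Self-driving cars, automated cancer diagnosis, and robot writing are closer than ever to large-scale commercial application.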

So, to some extent, it is fair to call data the "new oil" and AI the "new drill." Malfunctioning algorithms can then be likened to the resulting "new pollution."

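Note, however, that a malfunctioning algorithm is not the same as a malicious one. Good intentions alone cannot guarantee that an algorithm will cause no legal, ethical, or social problems.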

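AI offers plenty of such examples: privacy violations, algorithmic bias, and abuse. These can arise from unintended technical behavior, a lack of foresight, the technical difficulty of monitoring and supervision, or diffuse responsibility. In addition, some researchers have begun to worry that intelligent machines will replace human labor and drive up unemployment.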

Many people still regard AI ethics as a science-fiction topic, but the trouble is already upon us.

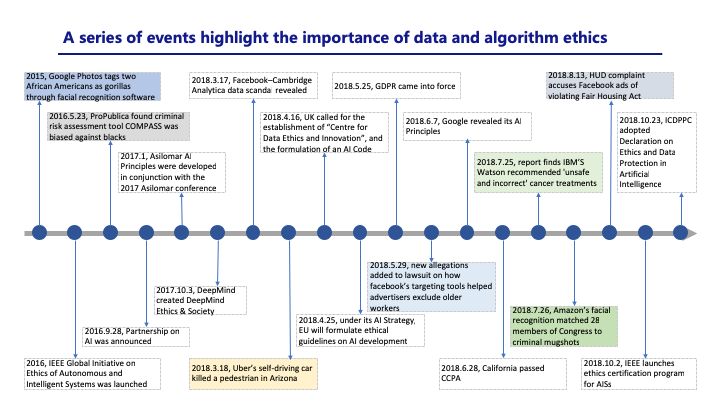

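In recent years, a series of reports of AI misbehavior has filled the news:
- facial recognition applications labeled African Americans as gorillas and matched members of Congress with criminals;
- a risk assessment tool used by US courts was biased against African Americans;
- an Uber self-driving car struck and killed a pedestrian in Arizona;
- Facebook and other large companies were sued over discriminatory advertising practices;
- and, as the previous scholar mentioned, some AI technologies are designed to kill.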

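How to establish ethics for AI is a question humanity has never faced before. We are sailing into uncharted waters and need principles and rules as a compass to guide this great expedition. Technology ethics lies at the core of those principles and rules.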

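Over the past few decades, the study of technology ethics has gone through three phases. The first phase focused on computers. During this period, countries enacted numerous ethical codes and laws on computer use, fraud, crime, and abuse, most of which still apply today. The second phase focused on the internet and produced ethical principles and laws governing the creation, protection, and dissemination of information and the prevention of its abuse. The field has now quietly entered a new, third phase, which I call "data and algorithm ethics." In the future, we will need ethical frameworks and laws for the development and use of AI.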

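Some government bodies and industry associations have already begun building such frameworks. Notable examples include the Asilomar AI Principles and IEEE's ethics standards and certification program.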

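In September this year, Pony Ma, Tencent's Chairman and CEO, put forward the "four-can" concept of AI at the 2018 World Artificial Intelligence Conference in Shanghai. Under this concept, future AI should be "knowable," "controllable," "usable," and "reliable." I have translated the "four-can" as "ARCC" (Available, Reliable, Comprehensible, and Controllable), pronounced "ark."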

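Mr. Ma's call lays a foundation for further developing an ethical framework for AI. Legend has it that Noah's Ark saved human civilization thousands of years ago. In the same way, ARCC in the field of AI will help ensure a friendly and harmonious relationship between humans and machines for thousands of years to come. These four principles therefore deserve careful examination.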

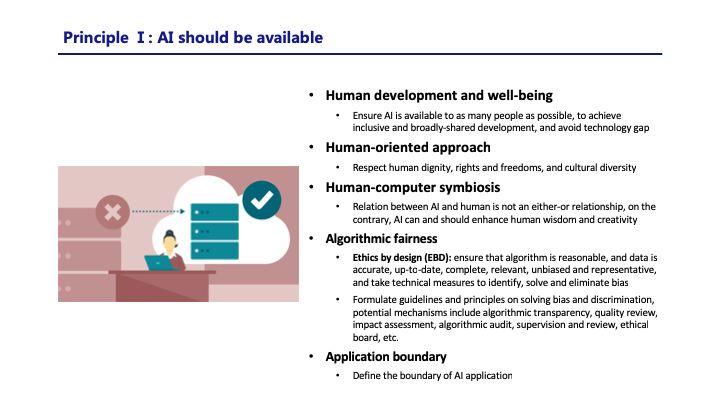

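First, Available. This principle requires that AI be accessible to and usable by as many people as possible, rather than reserved for a few. We have grown so used to the convenience of smartphones, the internet, and apps that we often forget half the world is still cut off from this digital revolution. Advances in AI should solve this problem, not worsen it. We should bring residents of underdeveloped regions, the elderly, and people with disabilities back into the digital world, rather than treat the digital divide as a fait accompli. The AI we need should be inclusive and broadly shared, with the common well-being of humanity as its sole purpose. Only then can we be sure that AI will not place the interests of some people above those of others.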

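Take recent developments in medical AI as an example. Miying, developed by Tencent's medical AI lab, is currently working with radiologists at hundreds of regional hospitals. This cancer pre-screening system has already reviewed hundreds of thousands of medical images and identified hundreds of thousands of suspected high-risk cases. It passes these cases on to human experts for further assessment. The technology frees doctors from the heavy work of reading images, giving them more time to care for patients. With machines and humans each doing what they do best, doctors here coexist peacefully with a machine that might seem to threaten their jobs.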

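Moreover, available AI should also be fair. A machine that follows pure reason ought to be impartial, free of human weaknesses such as emotion and prejudice. But machine impartiality cannot be taken for granted. Recent incidents, such as the Microsoft chatbot that used vulgar language, show that AI "fed" inaccurate, outdated, incomplete, or biased data makes the same mistakes humans do. We must therefore stress "ethics by design," which means carefully identifying, addressing, and eliminating bias during AI development itself. Encouragingly, government bodies and internet industry organizations are already drafting guidelines and principles to address algorithmic bias and discrimination. Large tech companies such as Google and Microsoft have also set up internal ethics committees to guide their AI research.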

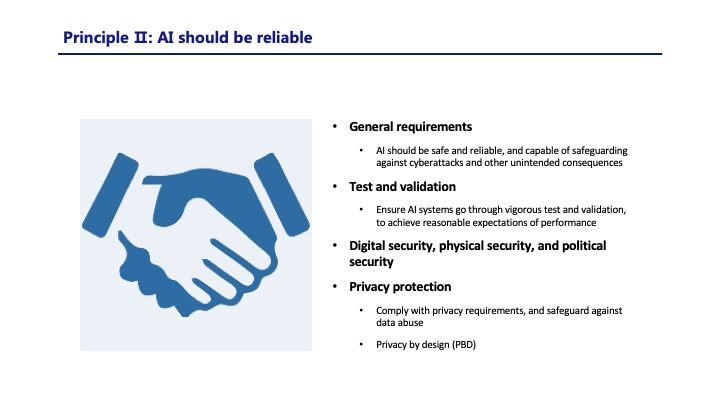

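Second, Reliable. AI applications such as robots have entered ordinary households, and we need them to be safe and reliable, able to withstand cyberattacks and other accidents. Take self-driving cars. Tencent is developing a Level 3 autonomous driving system and has obtained a license for road testing in Shenzhen. Before obtaining that license, our self-driving cars had logged more than 1,000 kilometers of tests on closed sites. However, the relevant certification standards and legal rules have yet to be settled, so no truly autonomous car is in commercial use on public roads today.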

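In addition, reliable AI should ensure digital, physical, and political security, and above all protect user privacy. AI developers collect personal data to train their systems. They should therefore comply with privacy requirements in the process, protect user privacy by design, and prevent data abuse.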

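Third, Comprehensible. The popularity of AI methods such as deep learning has made the underlying technical details increasingly opaque, hidden inside a "black box." A deep neural network has many hidden layers between its input and output, which can make the system hard to understand even for its own developers. As a result, when an AI algorithm causes a car crash, investigating the technical cause may take years.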

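Fortunately, the AI industry has begun researching comprehensible AI models, and algorithmic transparency is one way to achieve them. Users may care little about the algorithms behind a product, but regulators need an in-depth understanding of the technical details in order to supervise. In any case, giving users easy-to-understand information and explanations about decisions made or assisted by AI systems is worth encouraging.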

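To build comprehensible AI, public participation and the exercise of individual rights should be guaranteed and encouraged. AI development should not be a secret undertaking of companies alone. The public, as end users, can provide valuable feedback. They also have the right to challenge machine decisions that may cause them harm or embarrassment. Both will help produce higher-quality AI.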

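Comprehensible AI also requires companies to disclose the necessary information. Tech companies should give users sufficient information about an AI system's purpose, functions, limitations, and impact.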

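Last but not least is Controllable, which ensures that humans always remain in charge. From the dawn of civilization thousands of years ago to today's technological boom, humanity has always controlled its own creations. AI, like any other technology, should be no exception. Only if machines remain controllable can humans step in promptly and stop things from getting worse when a machine goes wrong and harms human interests. Only by strictly following the principle of controllability can we avoid the science-fiction nightmares described by figures such as Stephen Hawking and Elon Musk.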

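Every innovation brings risks. But we should not let these worries and fears stop us from using new technologies to move toward a better future. What we should do is ensure that the benefits of AI far outweigh its potential risks, and develop and adopt appropriate precautions against those risks.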

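For now, people do not trust AI. We often hear complaints that self-driving cars are unsafe, filtering systems are unfair, recommendation algorithms limit our choices, and pricing bots push prices up. This deep-rooted suspicion stems from a lack of information: most of us either don't care about AI or lack the knowledge to understand it. What should we do about this?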

My proposal is to start from ethical principles and build a system of rules that helps AI developers and their products earn public trust.

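At the far left of this spectrum of rules are soft-law rules. These include non-binding social conventions, ethical standards, and self-regulation, as well as the ethical principles I have described. Internationally, Google, Microsoft, and other large companies have published their own AI principles, and the Asilomar AI Principles and IEEE's AI ethics program have been highly praised.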

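Moving right along the spectrum, we find mandatory rules such as standards and laws. This year we published a policy research report on self-driving cars. It found that many countries are working on laws to encourage and regulate them. We can expect countries to enact new laws on AI in the future.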

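Further right, criminal law can punish the malicious use of AI. At the far right end is international law, which has the broadest reach. Some international scholars are actively pushing the United Nations to adopt a convention banning lethal autonomous weapons, much like the Convention on Prohibitions or Restrictions on the Use of Certain Conventional Weapons.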

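Whether it is nuclear technology or AI, technology itself is neutral, neither good nor evil. It is our responsibility as humans to give technology its value and make it good.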

That concludes my remarks. Thank you for listening!

—— English version ——

Towards an Ethical Framework for Artificial Intelligence

Jason Si

Thank you, Professor Vogl, for the warm introduction. Thank you, Angel, for this interesting piece about lethal autonomous weapons.

It is good to know someone has carefully considered the possibility of man-made machines turning against their creators. Isaac Asimov's "Three Laws of Robotics" certainly no longer apply here. We need something more serious and more specific to guard our limitless ambitions, and to guard humanity.

I would like to share some of my thoughts on recent developments in artificial intelligence from an ethical perspective. Hence my title, “Towards an Ethical Framework for Artificial Intelligence”.

The recent AI boom was largely built on the tremendous amount of data we have piled up through the internet. The internet now connects more than half of the world’s population, and there are 800 million netizens in China’s cyberspace alone.

Along with the convenience and efficiency brought by the internet come risks. This is especially true in an age when a good deal of our daily life is driven by big data and artificial intelligence. Algorithms are widely used to determine what we read, where we go and how we get there, what music we listen to, and what we buy and at what price. Self-driving cars, automatic cancer diagnosis, and machine writing have never been so close to large-scale commercial application.

It is therefore, to a certain extent, proper to call data the new oil and AI the new drill. Following this analogy, malfunctioning algorithms are the new carbon dioxide emissions.

Note that malfunction does not equal malevolence. Good intentions do not guarantee freedom from legal, ethical, and social troubles. With AI, we have already observed a fairly large number of such troubles. They arise from unintended behavior, lack of foresight, difficulty of monitoring and supervision, and distributed liability, and they take the form of privacy violations, algorithmic bias, and abuse. Moreover, some researchers have started to worry about a rise in unemployment caused by smart machines that seem destined to replace human labor.

Troubles are looming.

A series of AI misbehaviors has made media headlines lately. A facial recognition app tagged African Americans as gorillas; another matched a US Congressman with a criminal warrant. A risk assessment tool used by US courts was alleged to be biased against African Americans. More seriously, an Uber self-driving car killed a pedestrian in Arizona. Facebook and other big companies were sued for discriminatory advertising practices. And we have just learned from Angel that some AI-empowered machines are designed to kill.

We are marching into uncharted territory. We need rules and principles as a compass to orient this great voyage. The tradition of technology ethics is certainly at the core of this set of rules and principles.

The study of technology ethics has gone through three phases over the past several decades. The first phase of studies focused on computers. During this period, ethical codes and laws concerning computer use, fraud, crime and abuse were enacted. Most of them still apply today.

The second phase came with the internet. It established ethical norms and laws concerning the creation, protection, and dissemination of information and the prevention of its abuse.

Now this research field is quietly entering a new, third phase, which I call “data and algorithm ethics”. New ethical frameworks and laws concerning the development and application of AI will be urgently needed in the coming years.

Here we should acknowledge some early efforts by government bodies and industry collaborations to build such a framework. Notable examples include the Asilomar AI Principles and IEEE’s ethics standards and certification program.

In September this year, speaking at the World Artificial Intelligence Conference in Shanghai, Mr. Pony Ma, Chairman and CEO of Tencent, challenged the high-tech industry to build available, reliable, comprehensible, and controllable AI systems.

Available, Reliable, Comprehensible, and Controllable. ARCC, or ar-k.

Pony’s call seems to have laid a foundation for further development of an AI ethical framework. Noah’s Ark saved human civilization thousands of years ago. In a similar way, ARCC for AI may secure a friendly and healthy relationship between humanity and machines in the thousands of years to come.

It is therefore worth studying these four terms carefully.

Available. AI should be available to the many, not the few. We are so used to the benefits of smartphones, the internet, apps, and the like that, more often than not, we forget that half the world is still cut off from this digital revolution.

Advances in AI should fix this problem, not exacerbate it. We should bring residents of underdeveloped areas, the elderly, and people with disabilities back into the digital world, rather than accept the digital divide as a given. The AI we need is inclusive and broadly shared. The wellbeing of humanity as a whole should be the sole purpose of AI development. Only then can we be sure that AI will not advance the interests of some people over those of the rest.

Take the recent development of medical bots as an example. Miying (“Looking for Shadow”), developed by Tencent’s AI team, is currently working with radiologists in hundreds of local hospitals. This cancer pre-screening system has so far examined billions of medical images and detected hundreds of thousands of high-risk cases. It then passes these cases on to experts. This frees doctors from the daily labor of reading images and gives them more time to attend to patients. Let machines do what machines are good at, and humans do what humans are good at. In this case, doctors are at peace with seemingly job-threatening machines.

Moreover, an available AI is a fair AI. A machine that follows pure rationality should be impartial and free of human weaknesses such as emotion or prejudice. This should not be taken for granted, however. Recent incidents, such as the vulgar language used by a chatbot developed by Microsoft, have shown us that AI can go seriously wrong when fed inaccurate, outdated, incomplete, or human-flawed data. An ethics-by-design approach is preferable here: carefully identifying, solving, and eliminating bias during the AI development process.

Regulatory bodies, such as government branches and internet industry organizations, are already formulating guidelines and principles for addressing bias and discrimination. Big tech companies like Google and Microsoft have already set up internal ethics boards to guide their AI research.

Reliable.

Since AI has already entered millions of households, we need it to be safe, reliable, and capable of withstanding cyberattacks and other accidents.

Take self-driving cars as an example. Tencent is currently developing a Level 3 autonomous driving system and has obtained a license to test its self-driving cars on certain public roads in Shenzhen. Before receiving the test license, its self-driving cars had been tested on closed sites for more than a thousand kilometers. Today, no truly self-driving car is in commercial use on our roads, because the related certification standards and regulations have yet to be established.

Besides, for AI to be reliable, it should ensure digital, physical, and political security, and especially privacy protection. AI companies collect personal data to train their AI systems. They should therefore comply with privacy requirements, protect privacy by design, and safeguard against data abuse.

Comprehensible.

Easy to say, hard to do. The popularity of AI development methods such as deep learning has increasingly sunk the underlying mechanisms into a black box. The hidden layers between the input and output of a deep neural network make it impenetrable even to its developers. As a result, when a car guided by an algorithm causes an accident, it may take years to find out why.

Fortunately, the AI industry has already done some research on explainable AI models. Algorithmic transparency is one way to achieve comprehensible AI. Users may not care about the algorithm behind a product, but regulators need deep knowledge of the technical details in order to supervise. In any case, a good practice is to give users easy-to-understand information and explanations about decisions assisted or made by AI systems.

To develop comprehensible AI, public engagement and the exercise of individual rights should be guaranteed and encouraged. AI development should not be a secret undertaking of commercial companies. The public, as end users, may provide valuable feedback, which is critical for developing high-quality AI. Individuals have the right to challenge bot decisions that may cause them harm or embarrassment.

This argument leads back to the requirement that AI developers disclose information to the public. Tech companies should be required to provide their customers with enough information about an AI system’s purpose, function, limitations, and impact.

Controllable.

Last but not least, this principle makes sure that we, human beings, are in charge, ALWAYS.

From the dawn of civilization until now, we have been in charge of all our inventions. AI must not be an exception; in fact, no technology should be. Control is the precondition for stopping the humming machine whenever something goes wrong and damages our interests.

Only by strictly following the controllable principle can we avoid the sci-fi nightmare pictured by prominent figures like Stephen Hawking and Elon Musk. With every innovation come risks. But we should not let worries about human extinction at the hands of some artificial general intelligence or super bot prevent us from pursuing a better future with new technologies. What we should do is make sure that the benefits of AI substantially outweigh the potential risks. We should also take the reins and set up appropriate precautionary measures against foreseeable risks.

For now, people often trust a stranger more than an AI, without good reason to do so.

We frequently hear claims that self-driving cars are unsafe, filters are unfair, recommendation algorithms restrict our choices, and pricing bots charge us more. This deeply embedded suspicion is rooted in a shortage of information, since most of us either don’t care or lack the knowledge needed to understand AI.

What to do?

I would like to propose a spectrum of rules, starting from an ethical framework, that may help AI developers and their products earn the trust they deserve.

On the far left, we have light-touch rules, such as social conventions, moral rules, and self-regulation. The ethical framework I mentioned belongs to this category. At the international level, Google, Microsoft, and other big companies have come up with their own AI principles, and the Asilomar AI Principles and IEEE’s AI ethics program have been widely praised.

As we move to the right, there are mandatory and binding rules, such as standards and regulations. We wrote a policy report on self-driving cars this year and found that many countries are making laws to encourage and regulate self-driving cars. In the future, there will be new laws for AI.

Further along, there is criminal law to punish bad actors for the malicious use of AI. At the extreme right, there is international law. For example, some international scholars have been pushing the United Nations to adopt a convention on lethal autonomous weapons, just like the Convention on Prohibitions or Restrictions on the Use of Certain Conventional Weapons.

A newborn technology, be it a controlled nuclear reaction or a humanoid bot, is neither good nor bad. It is OUR duty to give it value and make it good.

This ends my remarks.

Thank you for listening.

— — | END | — —
